Cortex Engine

Summary

Large language models (LLMs) can be extraordinarily good at understanding and answering our questions. For the first time, it appears that a machine can communicate in our language, instead of us having to learn a particular domain-specific language (DSL) or set of commands. This can be coupled with both speech to text (STT) and text to speech (TTS) to make it possible to have an almost natural conversation with a machine. This capability should make it possible for users to interact with new programmes with little or no training, because the machine is adapting to the user and not the other way around.

LLMs are progressing rapidly but do not currently model all of the capabilities of the human brain. That statement is likely to be true of publicly available models, although behind the closed doors of large companies the lines are almost certainly beginning to blur. For those of us who do not have access to the cutting edge and have to rely on publicly available information, the statement stands. It is therefore interesting to compare the human brain with the LLMs I have access to and list some of the elements that are missing. In this comparison, an LLM could be likened to a human’s long-term memory. The LLM has a vast array of facts and information from which to supply answers, but it cannot remember new information without the support of external features such as retrieval-augmented generation (RAG). The LLM is also limited in its ability to evaluate the content of the questions it is asked, or the statements that the user makes.

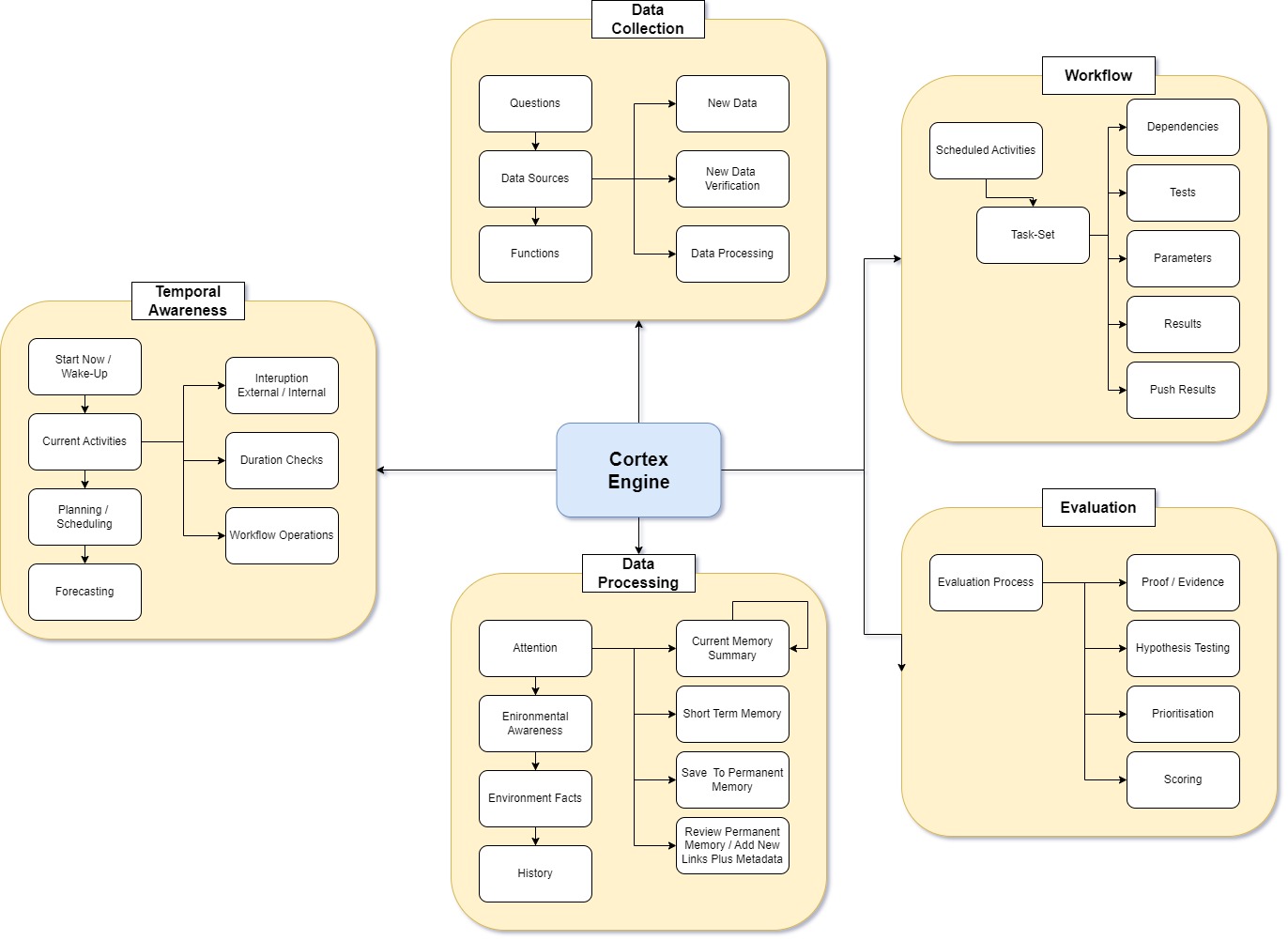

The Cortex Engine is a collection of complementary capabilities that together perform some of the roles that a human would be capable of.

Diagram/Illustration 1.00 Showing additions to an LLM.

The workflow capability of the Cortex Engine can perform multiple roles. While it can act as a regular workflow, able to perform a set of tasks, it can also utilise a library of existing partial workload patterns. An example of an existing pattern is the ability to expand an initial question using common approaches such as finding alternatives, or supporting or disproving theories for any assertion made.
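As a rough illustration of what a pattern library for question expansion might look like, the sketch below treats each pattern as a named prompt template. The `Pattern` class, the `PATTERN_LIBRARY` contents and the `expand_question` function are all hypothetical names chosen for this example; they are not taken from the Cortex Engine itself.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Pattern:
    """Hypothetical partial pattern: a named template that rewrites a question."""
    name: str
    template: str  # must contain the placeholder "{question}"

    def apply(self, question: str) -> str:
        return self.template.format(question=question)


# Assumed library contents, mirroring the three approaches named in the text.
PATTERN_LIBRARY: Dict[str, Pattern] = {
    "alternatives": Pattern(
        "alternatives",
        "What alternative explanations or options exist for: {question}",
    ),
    "support": Pattern(
        "support",
        "What evidence would support the assertion in: {question}",
    ),
    "disprove": Pattern(
        "disprove",
        "What evidence would disprove the assertion in: {question}",
    ),
}


def expand_question(question: str, pattern_names: List[str]) -> List[str]:
    """Return one follow-up prompt per requested pattern, in the order given."""
    return [PATTERN_LIBRARY[name].apply(question) for name in pattern_names]


if __name__ == "__main__":
    for prompt in expand_question(
        "Does caffeine improve memory?", ["alternatives", "support", "disprove"]
    ):
        print(prompt)
```

Each expanded prompt could then be passed to an LLM as a separate workflow step. Keeping the patterns as data rather than code is one way to let new patterns be added without changing the workflow logic.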

Multi-shot prompting of LLMs can produce significantly better results by instructing the LLM on the thought processes involved in producing the answer. One approach would be to ask the LLM where it might find the best data sources for the question that has been presented, then to predict what data it might find in each source, to search each source, and finally to evaluate the data it has found against its own predictions. This same technique can be used to dig deeper into existing information. The Cortex Engine’s workflow functionality can be used to find related sources of data for each aspect of an initial answer, then iteratively expand on this new data to form a more comprehensive overall answer. The Cortex Engine’s evaluation process can be used across this data to test and hypothesise at each iteration to help develop new leads. It can also help to prioritise and score returned information while searching for relationships and similarities with data already returned. Comparing answers across multiple data sources, as well as scoring the answers, helps to reduce the tendency to hallucinate.
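The predict, search and evaluate sequence described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the Cortex Engine's implementation: `llm` and `search` are hypothetical callables supplied by the caller, the prompt wording is invented, and `word_overlap` is a deliberately crude stand-in for a real evaluation step.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces: any function with these shapes can be plugged in.
LLM = Callable[[str], str]          # prompt in, text out
Search = Callable[[str, str], str]  # (source, question) -> retrieved text


def word_overlap(a: str, b: str) -> float:
    """Jaccard overlap of lower-cased word sets (a crude agreement score)."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    if not wa or not wb:
        return 0.0
    return len(wa & wb) / len(wa | wb)


def predict_search_evaluate(
    question: str, llm: LLM, search: Search
) -> List[Tuple[str, float]]:
    """Score each candidate source by how well its data matches the prediction."""
    # Step 1: ask where the best data sources might be, one per line.
    listing = llm(f"List, one per line, the best data sources for: {question}")
    sources = [line.strip() for line in listing.splitlines() if line.strip()]

    scored: List[Tuple[str, float]] = []
    for source in sources:
        # Step 2: predict what the source is expected to contain.
        predicted = llm(f"Predict what {source} will say about: {question}")
        # Step 3: search the source.
        found = search(source, question)
        # Step 4: evaluate the retrieved data against the prediction.
        scored.append((source, word_overlap(predicted, found)))

    # Highest agreement first, so later steps can prioritise those sources.
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

In an iterative workflow, the highest-scoring results could be fed back in as new questions, while low-scoring sources flag places where the prediction and the retrieved data disagree and may need closer inspection.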